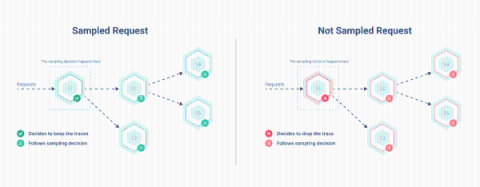

How to Keep Traces for Slow and Failed Requests

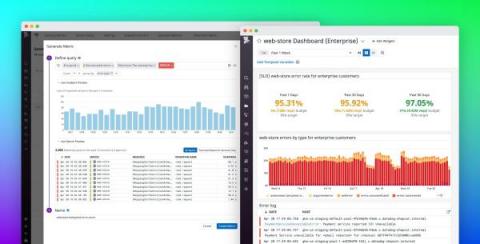

Today we are introducing Local Tail-Based sampling in Kamon Telemetry! We will tell you all about it in a moment, but first let's take a couple of minutes to explore what sampling is, how it is used today, and what motivated us to include local tail-based sampling in Kamon Telemetry.